Problem statement:

The end client has more than 156 cinema locations for which

the GST data needs to be consolidated for monthly GST filing. The problems that

the end client desires to solve are as follows:

·

Automation of the GST loads process.

·

Error handling and data auditing

Tasks achieved

Full load of all 156 locations:

The ETL team tried single load and 2 parallel load approach

for implementation of the same.

The performance parameters of the ETL process was gauged by

the following parameters:

·

Time of load

·

Error logging and status logging

·

Types of errors

·

Validation issues

Process flow: The end client has a set of stored procedures

running at remote locations. The procedures are of 2(two) types: 2 parameter

and 3 parameters. The ETL load accommodated both the types of stored procedures

into the final solution.

The ETL team has done an initial load for 2 parameter(only the

date from and date to are given as input parameters) and 3 parameter

locations(only the cinema_operator,date from and date to are given as input

parameters).

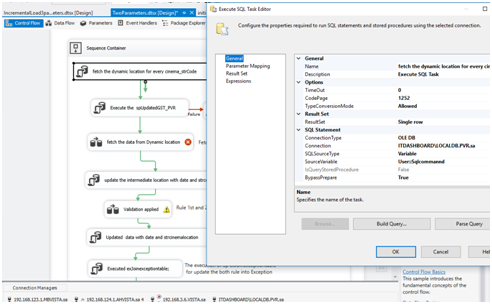

Attached is the entire process flow at the process task

level:

In the initial load package, the initial load of 2

parameters and 3 parameters happens in parallel.

Hereafter there could be some locations that are not loaded

(of 2 parameters and 3 parameters). These locations are loaded in the

incremental set up.

Condition for the incremental set up is derived from the

variable value:

@[user::count]>0 condition is given for add missing value

in data for the process is running when count Value is greater then 0.

The given variable @usercount is derived from the execution

of the sql transform as follows:

Mapping of the result output to the variable:

Package explanation of initial load :

3 parameters and 2 parameters are loaded in parallel in

initial load package.

Explanation of two parameters load

Fetch of the maximum row_id from audit_table. The table name

audit_table has all the locations with 2(two) parameter load.

Set the parameters

for loop increments.

The variable @inc is

set to 1.

@max variable has

been set to the maximum number of rows in the audit table.

For each location a dynamic connection string is used for

connecting to the remote location and extracting data from the remote location.

Fetching of connection string for dynamic location: the

audit table has the connection parameters or all locations. These connection

parameters are extracted one by one using the execute sql command.

Sqlcommand: =

" select Cinema_strCode code, Accessed_user id,

Channel_Address ip, convert(varchar(30), DECRYPTBYPASSPHRASE('8', password))

pass, SUBSTRING(tableSynonym, 0, CHARINDEX('.', tableSynonym, 1))dbname from

tblCinema_Master where Cinema_strCode in (select code from audit_table

where row_id="+(dt_wstr,4)

@[User::inc]+" );"

The data is set to result set

Dynamic Location is set to the static location by setting

the expression connection string

Con1:(setting up the connection string)

"Data Source="+ @[User::ip1] +";User ID="+ @[User::id1]

+";Initial Catalog="+ @[User::dbname1]

+";Provider=SQLNCLI11.1"+";Password="+ @[User::pass1]

+";"

Now the Sql Execute Task connection string assigned dynamic

connection.

Fetching the data from remote location using dynamic

connection string:

Updated Intermediate_Test

Validation rules: the end client asked the ETL team to apply

validation rules to check the quality of the data. the End client had input

percentages of 18,12,11,14,0 and 5. Any transactions not in the given

percentage ranges were classified as having wrong data.

Rule Apply first Check (18,12,11,14,0,5)

Checked Rule Second : this rule entailed that any entry

having 0% GST should not have total value less than -2 or more than 2.

Update the Intermediate location with covered date and

location.

Executed the exjoinexceptiontable for apply both rules.

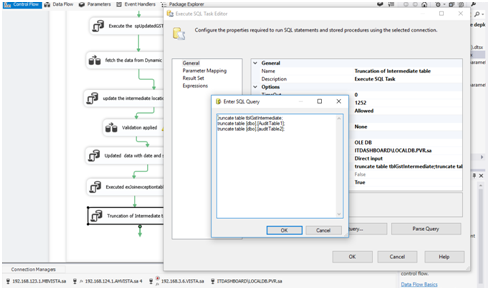

Truncated the intermediate tables

truncate table tblGstIntermediate;

truncate table [dbo].[AuditTable1];

truncate table [dbo].[auditTable2];

Incremental load

of two parameters; this module indicates the approach for 2 parameter incremental load.

The incremental load for 2 parameters was required since there could be some locations that have been missed out of the initial load due to the connection errors. In such a scenario the incremental load for 2 parameter locations needs to be done.

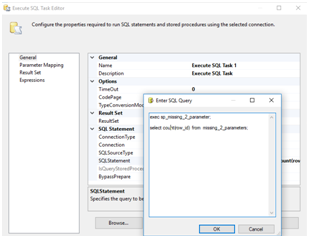

ALTER procedure [dbo].[sp_missing_2_parameter]

as

begin

truncate table missing_2_parameters;

--drop index idx_cinema_str on

tblGst_Test11;

with cte1 as(select distinct cinema_strcode from

[dbo].[tblGst_Test11])

select * into #cte1 from cte1;

create index #idx_cte1 on #cte1(cinema_strcode);

with

cte2

as( select * from [dbo].[audit_table]

where code not in (select distinct cinema_strcode from

#cte1))

insert into missing_2_parameters

select * from cte2;

--create index idx_cinema_str on

tblGst_Test11(cinema_strcode);

end;

We will find the missing location from missing_2_parameters,

and we

Start loading the data from missing_2_parameters table.

Executed the sp_missing_2_parameter for load the missing

location

exec sp_missing_2_parameter;

The find out the maximum missing_id from missing_2_parameters.

select max( missing_id)

from missing_2_parameters

Setting the value of for loop parameters

For loop will iterate till the max_inc meet.

Note :

Then we have taken sequence container

Inside taken Execute SQL task (Taking Dynamic connection

string one by one)

The ETL team replicated the same process for 3 parameter load.

Note: this blog covers only the serial execution. The process of parallel execution(running multiple dataflows simultaneously for 2 parameter data load was work in progress).

APPENDIX

Activity

1.)

Logging : the logging mode was enabled for

package data load.

1.

Set the error log for the package.

Steps:-

Set the Provider type and configure the type

of data base where to store.

Then set the event to store the error points

In case of any GST automation requirements, please contact the following:

Name: Apoorv Chaturvedi

email: support@mndatasolutions.com;support@turbodatatool.com

Phone; +91-8802466356

Website: https://mn-business-intelligence-india.business.site/