For free PowerBI dashboards elaborating the features below, contact the following:

- Apoorv Chaturvedi

- email: apoorv@mnnbi.com

The ETL team intended to develop a reporting solution over Turbodata with the following tenets:

- Scalable: the reporting solution should be used across multiple end clients. Thus it should have the following features:
  - Role management
  - Migration properties
  - Connectivity across multiple databases such as SAP, Navision etc.
  - Optimum cost: PowerBI is free below 1GB of data.
  - Handling of reporting errors such as fan traps and chasm traps

Implementation of Turbodata reporting in the form of a dashboard, a semantic layer and the relevant query implementation.

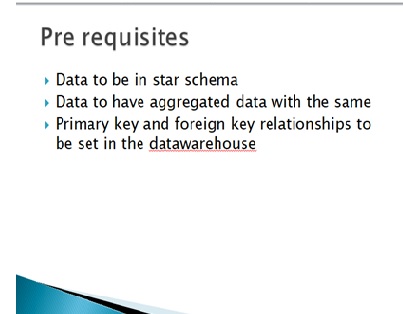

Design the semantic layer

Step-1: Import the database and its tables.

Step-2: The Import option loads the data onto the local machine; DirectQuery runs the query at the database level.

We loaded the dimension tables, the fact table and the aggregate tables.

Manage the relationships between tables.

Developing Reports

New Report

Export data to Excel

Drill down and Drill through

- Set up a hierarchy

- Drill down on the graph by hierarchy

- Drill down for data points

Open chart: inventory turnover

Drill up and Drill down
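The drill-down behaviour can be pictured as re-aggregating the same fact rows one hierarchy level at a time. The following is a minimal Python sketch of that idea, not Power BI's own mechanism; the `year > quarter > month` hierarchy, the `facts` rows and the `drill` helper are all hypothetical names chosen for illustration.

```python
from collections import defaultdict

# Hypothetical hierarchy and fact rows, for illustration only.
HIERARCHY = ["year", "quarter", "month"]
facts = [
    {"year": 2023, "quarter": "Q1", "month": "Jan", "amount": 10},
    {"year": 2023, "quarter": "Q1", "month": "Feb", "amount": 20},
    {"year": 2023, "quarter": "Q2", "month": "Apr", "amount": 30},
    {"year": 2024, "quarter": "Q1", "month": "Jan", "amount": 40},
]


def drill(rows, path=()):
    """Total `amount` at the hierarchy level just below `path`.

    `path` holds the members already drilled into, e.g. (2023, "Q1").
    Drilling up is simply calling again with `path[:-1]`.
    """
    depth = len(path)
    if depth >= len(HIERARCHY):
        raise ValueError("already at the lowest level of the hierarchy")
    level = HIERARCHY[depth]
    totals = defaultdict(int)
    for row in rows:
        # Keep only the rows that belong to the selected parent members.
        if all(row[HIERARCHY[i]] == member for i, member in enumerate(path)):
            totals[row[level]] += row["amount"]
    return dict(totals)


by_year = drill(facts)                    # top level
by_quarter = drill(facts, (2023,))        # drill down into 2023
by_month = drill(facts, (2023, "Q1"))     # drill down into 2023 Q1
```

Each call filters on the parent members already selected and groups by the next level, which is why a hierarchy has to be set up before drilling is possible.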

Custom measures: sample

Power BI Joins Implementations

Steps:

i) Go to Edit Queries

ii) Click Merge Queries

iii) Select Source and Destination Database

iv) Select the join type (left, right, full)

v) Select the common column

We applied the condition by clicking OK.

Query

Applied Data:

Right Outer Joins in Power BI Desktop

Steps:

i) Go to Edit Queries

ii) Click Merge Queries

iii) Select Source and Destination Database

iv) Select Right Outer join

v) Select the common column

Full Outer Join Table

Inner Join in Power BI

Output of inner join
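To make the difference between the join types concrete, here is a small Python sketch that mimics what the merge step returns for inner, left, right and full outer joins. It is an illustration only and not how Power BI implements Merge Queries; the `items` and `sales` tables, their columns and the `merge` helper are hypothetical.

```python
# Hypothetical sample tables; names and values are illustrative only.
items = [
    {"item_id": 1, "item_name": "Bolt"},
    {"item_id": 2, "item_name": "Nut"},
    {"item_id": 3, "item_name": "Washer"},
]
sales = [
    {"item_id": 2, "qty": 10},
    {"item_id": 3, "qty": 5},
    {"item_id": 4, "qty": 7},
]


def merge(left, right, key, how="inner"):
    """Join two lists of dicts on `key`; `how` is inner, left, right or full."""
    left_keys = {row[key] for row in left}
    right_keys = {row[key] for row in right}
    if how == "inner":
        keys = left_keys & right_keys      # only keys present on both sides
    elif how == "left":
        keys = left_keys                   # every key of the left table
    elif how == "right":
        keys = right_keys                  # every key of the right table
    elif how == "full":
        keys = left_keys | right_keys      # every key of either table
    else:
        raise ValueError(f"unknown join type: {how}")

    columns = {c for row in left + right for c in row}
    result = []
    for k in sorted(keys):
        # A missing side is represented by one placeholder (None) row.
        left_rows = [r for r in left if r[key] == k] or [None]
        right_rows = [r for r in right if r[key] == k] or [None]
        for left_row in left_rows:
            for right_row in right_rows:
                row = dict.fromkeys(columns)   # unmatched columns stay None
                row.update(left_row or {})
                row.update(right_row or {})
                row[key] = k
                result.append(row)
    return result


inner = merge(items, sales, "item_id", "inner")
left_outer = merge(items, sales, "item_id", "left")
right_outer = merge(items, sales, "item_id", "right")
full_outer = merge(items, sales, "item_id", "full")
```

With this sample data, the inner join keeps only items 2 and 3, the left outer join adds item 1 with an empty `qty`, the right outer join adds item 4 with an empty `item_name`, and the full outer join contains all four.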

Why parameters?

- Query to be executed in SQL by using external inputs
  - Report migration
  - On-the-fly ABC classification

Apply the parameter inside Power BI

- Create the parameter

- Create queries

2. Go to PowerBI

I) Right-click on the table, then click on the Advanced Editor
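The idea of running a SQL query with external inputs, and of an on-the-fly ABC classification, can be sketched outside Power BI as follows. This is a minimal Python example using an in-memory SQLite database, not the Power BI parameter syntax itself. The `sales` table, the `warehouse` filter, the 80%/95% cut-offs and the cumulative-share rule are all assumptions made for illustration.

```python
import sqlite3

# Hypothetical data set; table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (warehouse TEXT, item TEXT, value INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("W1", "P", 700), ("W1", "Q", 200), ("W1", "R", 100), ("W2", "P", 50)],
)


def abc_classify(conn, warehouse, a_cutoff=0.80, b_cutoff=0.95):
    """Classify the items of one warehouse as A, B or C.

    `warehouse`, `a_cutoff` and `b_cutoff` play the role of report
    parameters: they are supplied from outside and change the result
    without editing the query text.
    """
    rows = conn.execute(
        "SELECT item, SUM(value) AS total FROM sales "
        "WHERE warehouse = ? GROUP BY item ORDER BY total DESC, item",
        (warehouse,),  # bound parameter, not string concatenation
    ).fetchall()
    grand_total = sum(total for _, total in rows)
    if not grand_total:
        return {}
    classes, running = {}, 0
    for item, total in rows:
        running += total
        share = running / grand_total  # cumulative share including this item
        if share <= a_cutoff:
            classes[item] = "A"
        elif share <= b_cutoff:
            classes[item] = "B"
        else:
            classes[item] = "C"
    return classes


default_classes = abc_classify(conn, "W1")
wider_a_band = abc_classify(conn, "W1", a_cutoff=0.95)
```

Changing `a_cutoff` re-classifies the items immediately, which is the point of keeping the thresholds as parameters rather than hard-coding them in the query.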

We have solved the problem of chasm traps and fan traps by implementing the following:

i) Union all

ii) Table alias name

iii) Decision on contexts

iv) Aggregate awareness
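To show why these traps matter, the sketch below reproduces a chasm trap in plain Python and then applies the union-all idea from the list above. It is an illustration under assumed data, not the Turbodata implementation; the `orders` and `payments` fact tables and their amounts are hypothetical.

```python
# Hypothetical fact tables that share only the customer dimension.
orders = [("C1", 100), ("C1", 200)]                # (customer, order_amount)
payments = [("C1", 50), ("C1", 60), ("C1", 70)]    # (customer, payment_amount)

# Chasm trap: joining both facts through the customer pairs every order
# with every payment, giving 2 x 3 = 6 rows for customer C1.
joined = [
    (order_amt, payment_amt)
    for order_cust, order_amt in orders
    for payment_cust, payment_amt in payments
    if order_cust == payment_cust
]
inflated_orders = sum(order_amt for order_amt, _ in joined)
inflated_payments = sum(payment_amt for _, payment_amt in joined)

# Union all: stack both facts into one common shape
# (customer, order_amount, payment_amount) so each source row appears once.
stacked = [(cust, amt, 0) for cust, amt in orders] + [
    (cust, 0, amt) for cust, amt in payments
]
order_total = sum(order_amt for _, order_amt, _ in stacked)
payment_total = sum(payment_amt for _, _, payment_amt in stacked)
```

The joined version reports order and payment totals of 900 and 360, while the stacked version reports the true totals of 300 and 180, because no row is multiplied by rows from the other fact table.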

Role Management in Power BI

Using Manage Roles, we can apply security rules: a specific user can be given access to specific data, and data access can be allowed or restricted by condition.

Data representation on the dashboard on the basis of role-based authentication
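Conceptually, a role pairs a name with a filter condition that decides which rows a user may see. The Python sketch below illustrates that idea only; it does not use Power BI's role definitions, and the role names, the `region` column and the `visible_rows` helper are hypothetical.

```python
# Hypothetical fact rows and roles, for illustration only.
rows = [
    {"region": "North", "sales": 120},
    {"region": "South", "sales": 80},
    {"region": "North", "sales": 40},
]

# Each role maps to a condition evaluated once per row.
ROLE_FILTERS = {
    "north_manager": lambda row: row["region"] == "North",
    "south_manager": lambda row: row["region"] == "South",
    "admin": lambda row: True,  # no restriction
}


def visible_rows(role, data):
    """Return only the rows the given role is allowed to see."""
    try:
        rule = ROLE_FILTERS[role]
    except KeyError:
        # Unknown roles get no access rather than full access.
        raise PermissionError(f"no such role: {role}") from None
    return [row for row in data if rule(row)]


north_total = sum(row["sales"] for row in visible_rows("north_manager", rows))
south_total = sum(row["sales"] for row in visible_rows("south_manager", rows))
admin_total = sum(row["sales"] for row in visible_rows("admin", rows))
```

The same dashboard total therefore differs per role: the north manager sees 160, the south manager sees 80 and the admin sees 240.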

Name: Aprajita Kumari

Email: aprajita@mnnbi.com

Phone: +91-9717077793

Linkedin details: https://www.linkedin.com/in/aprajita-kumari-81635548/

Alternate contact details: apoorv@mnnbi.com

Phone: +91-8802466356

Website: www.mnnbi.com