Turbo Tally Reports

The given product is for the following set of end clients:

a.) Companies looking to give out loans to other firms

b.) Companies looking to factor invoices for other firms

c.) Companies looking for financial advisory services

Background:

The given product has been developed in collaboration with top end financial consultants from Deloitte, Schlumberger. The given product has been developed to provide ease of reporting using Powerbi from desktop solutions such as Tally.

The details of the solution:

o All the reports shall be embedded in Tally

o All the reports have been designed in such a manner that direct integration with databases using

json, xml can be done with ease.

o All the reports can be exported to MS Excel

o Direct integration with PowerBI and Tableau shall be done for ease of analysis.

o The preliminary solution contains 5(five) different reports entailing master details, transaction

details and the payment/receivable ageing details.

For the same the ETL team has devised a solution that entails the following:

Get complete details of sundry debtors and sundry creditors.

Difference from Tally report:

o The given output shall be a consolidated list of sundry debtors and sundry creditors. The

output can be directly interfaced with any database (cloud or on site relational database).

Get the complete list of sales, purchase, stock journal, credit notes and debit note outputs. In the

first version the journal details (used for fixed asset purchase) have been excluded.

o

Difference from Tally:

The end client does not need to use multiple reports of sales register, purchase

register and journal register

Calculate the bill receivable and bill payable ageing details. This is required because the end client

should know the following:

o Outstanding bill payments

o Outstanding bill receipts.

Bill receivable and bill payable ageing report: this particular report captures the following movement

types:

o Sales

o Purchase

o Stock journal debit

o Stock journal credit

o Journal debit

o Journal credit

o Payment

o Receipt

For the given report, the bill reference number is required. The entries that do not have the bill

reference number shall be excluded from the report.

The formulae for the Bill payable ageing as per the product is as follows:

o Total payable outstanding ageing: Total purchase + Total journal debit + Total stock journal debit-

payment - Total debit notes

o Total receivable outstanding specification: Total sales + Total journal credit + Total stock journal

credit - receipt - Total credit notes

Level of granularity of the report: this report has been generated at voucher, allledgerentries and bill

allocations level from the Tally solution. The ageing aggregate has been generated for the consolidated

time period for which Tally has been opened by the end user.

Ageing formulae: the ageing formulae has been devised as the difference between the current fiscal

date as set by the system variable ##SVCurrentdate and the bill due date. The bill due date is the bill

date in case the bill credit period for the sundry debtor or sundry creditor is 0, else it adjusts the bill due

date as per the bill credit period.

In case of

On Account entry the following shall be the entries:

·

Due date of payment shall be the same

as Bill credit period

·

The number of due days shall be 43830.

·

‘Name’ in the audit report refers to

the bill reference number.

The final

report looks as follows:

Attached

is the explanation of the above report:

·

DATE: For the sales, purchase invoice

·

BILLID: this is the reference number between

sales and receipt, purchase and payment.

·

PARTYLEDGERNAME: self-explanatory

·

TOTAL

OUTSTANDING RECEIVABLES: to date total receivables, that is total sales

minus total receipt

·

TOTAL

OUTSTANDING PAYABLES: to date total payables, that is total purchase

minus total payments

·

Ageing: this is the number of days

between current date and the date of sales/purchase.

Note:

·

This report includes logic for credit notes,

debit notes, stock journal and journal also.

The given

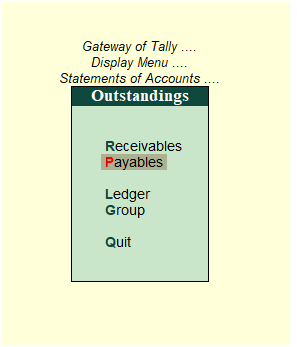

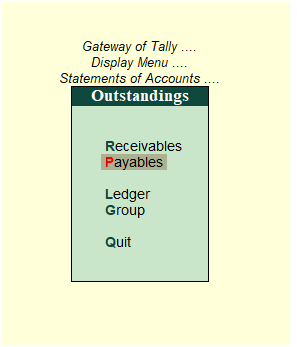

report has consolidated the following Tally reports:

Also

the given report helps give the total ledger outstandings across all ledgers.

Within Tally the end client is supposed to extract total outstandings ledger

wise

Sundry

creditors and Sundry debtors reports:

o

The report shall include all sundry

creditors and sundry debtors by looking at whether the ledger entries belong to

group sundry debtors or group sundry creditors.

o

The report shall include all outputs

as in ledger details for sundry debtors and sundry creditors.

Explanation

of each field is as follows:

·

PARTY NAME: the party to which sales,

purchase, credit note or debit note has been issued

·

ENTITY TYPE: Select Regular from Registration Types.

·

PAN: Self-explanatory from above

screenshot.

·

ADDRESS: Self-explanatory from above

screenshot.

·

PINCODE: Self-explanatory from above

screenshot.

·

STATE: Self-explanatory from above

screenshot.

·

COUNTRY: Self-explanatory from above

screenshot.

·

All the other details are coming from

ledger master.

Sample

Movement analysis report:

This

report is built at Voucher and All Ledger Entries details.

In this

report, the following is the business logic that is given.

o

The total amount is the first

collection field of amount by all ledger entries

o

The taxable amount is the second

collection field by all ledger entries

o

For the IGST amount the software

checks for the IGST entry in 3rd, 4th and 5th

ledgers from voucher details.

o

For the SGST amount the software

checks for the 3rd, 4th and 5th ledgers from

the voucher details

o

For the CGST amount, the software

checks for the 3rd, 4th and 5th ledgers from the voucher

details.

This given

report consolidates 5 different Tally reports as follows:

Explanation

of each field is as follows:

·

Partyledgername: the party to which sales,

purchase, credit note or debit note has been issued

·

GST registration type: this is the

registration type for the second ledger in the voucher as per inventory view.

·

Invoice number

·

Invoice date

·

Ship to: if the voucher has ship to

details then the same shall be given

·

Bill to: if the voucher has ‘bill to’

details then the same shall be given

·

PARTYGSTIN: the GST number for the

party

·

Document number: the reference

document number of the voucher. It could be sales, purchase etc. this is the

parent of the voucher type name

·

Currency

·

Note no: to be extensively used for

the credit notes and debit notes

·

Total: The total invoice amount

·

Taxable: the total taxable amount

·

IGST, SGST, CGST and CESS: self-explanatory

·

GST supply: specifies whether goods or

services

·

Ledger: the second ledger entry in the

voucher

·

BasicPurchaseorderno: specifies the

basic purchase order based on which the sales invoice was generated

·

GST nature: specifies whether

‘taxable’, ‘exempt’ etc.

·

Basicshipvesselno

·

Basicorderref

·

Basicshippingdate

·

Dispatch date

·

Good receipt date

Points to

note:

o

The given module does not have HSN

details

o

The fields GST Nature of Return,

Taxability and nature of services have been set as from the ledger object and

associated collections. For the same the collections GST details, State wise

details and Rate details have been extensively used.

Quick

points to note:

o

If the value are empty then a default

value of “NA” has been put into it.

Batch

Allocation report: This particular report gives the batch wise profitability

across all movement types: sales, purchase, journal debit, journal credit,

debit notes and credit notes

This

report helps consolidate the different movement type reports from sales,

purchase into one single report.

For

example instead of a filtered report as given below:

The end

client shall get a consolidate batch movement report.

The

explanation for the report is as follows:

·

Batch ID: Self explanatory

·

Batch name: Self explanatory

·

Billedqty_purchase: Total quantity

purchased for the given batch to date

·

Billedqty_sale: Total quantity sale

for the given batch and given item to date

·

Batchqty_stock: Total stock journal

debit for the batch and given item up to the given date

·

Batchqty_stock_Jrnl: Total stock

journal credit for the batch and item up

to the given date

·

Debit_Note: Total debit note quantity

for the item and batch up to the given date

·

Credit_Note: Total credit note

quantity for the item and batch up to the given date

·

Net_Difference:Billedqty_purchase-Billedqty_sale+Batchqty_stock-Batchqty_stock_jrnl-debit_Note+Credit_Note

·

Batch_Rate: This is the purchase rate

of the item for the given batch

·

Net_Amount: Multiplication of

Batch_rate with Net_Difference

Prepared by:

Contact: Apoorv Chaturvedi(8802466356)

email:mndatasolutionsindia@gmail.com,support@mndatasolutions.com;support@turbodatatool.com

Website: https://mn-business-intelligence-india.business.site/